So what is distributed consensus?

It is the process of having a set of machines agree upon a common value

Why is distributed consensus an important problem?

If we can get all machines to agree upon some value in a consistent manner, we get resiliency in the face of temporary machine failures and thus higher availability of the system

Why is distributed consensus a challenging problem?

The machines have to agree upon a common value while communicating with each other over an unreliable network; furthermore the system should be able to gracefully handle the failure of a few machines

What do we mean by an unreliable network?

A message sent to a machine may never reach it

A message sent later might arrive before a message sent earlier

A message may be delivered more than once

Are there other factors we should consider?

Machines can be malicious and can try to intentionally mislead the system so it doesn’t achieve consensus

(this is called Byzantine fault tolerance in the literature)

Paxos assumes that this is NOT the case

How many machines are we trying to reach consensus between?

On the order of 5 - 9 machines

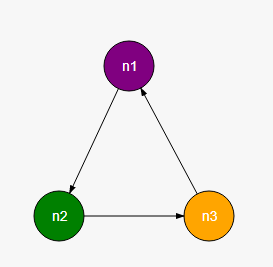

We’ll assume three classes of machines as we derive the protocol

Proposers

Acceptors

Learners

Proposers are responsible proposing values to the acceptors

Clients contact one of the proposers if they want the system to choose a certain value and the proposers in turn try to get that value accepted (we'll not be talking about clients from here on out for simplicity and assume the proposers are trying to get a value suggested by a client accepted by the system)

It’s a good idea to have more than one proposer as the system won’t be functional if there was only one and it crashed and was down for a while

Let’s pick two as we derive the protocol

Acceptors are responsible for accepting (or rejecting) proposals from proposers while ensuring that the complete set of acceptors doesn’t accept conflicting values

It’s good to have more than one acceptor as the system won’t be functional if there was only one and it crashed and was down for a while

Let’s pick two as we derive the protocol

Learners learn what the set of acceptors have chosen as a whole

Let’s pick just one learner as we derive the protocol for simplicity

Let’s only deal with the unreliable network at first as we come up with the protocol and assume that no machine can go down

We’ll extend this simpler algorithm to handle machine failures later

What’s the simplest algorithm we can think of?

How about each proposer sends an “accept” message to all acceptors and each acceptor accepts the first message it receives?

What can possibly go wrong? ;)

What went wrong?

Both the proposers sent the accept messages at about the same time and since we couldn’t guarantee that the accept message for any one proposer will reach all acceptors before the other, chaos ensued

How can we fix this?

One option is for a proposer to first send a promise message to all acceptors and make them promise that they won’t accept any other acceptors accept message before it sends its accept message

This may work

How will the proposer identify itself in its promise message?

We can use the IP address of the proposer

Or better yet, we can identify the “proposal” instead of the proposer thus allowing a proposer to send multiple proposals

This gives us more flexibility and isn’t any more complicated so let’s go with this approach

Each proposal can be identified by a pair [sequence number, proposer name]

So the first proposal sent by p1 can be [1, p1]

If p1 ever decides to send a second proposal, that can be named [2, p1] etc

etc

So we bumped into a similar problem as before

The promise messages reached the acceptors in an inconvenient order just like the accept messages had reached earlier

This was actually quite predictable and we should have seen this coming

Since acceptors might receive promise messages from multiple proposers, they will need to order them somehow so they accept one or the other but not both

We can achieve this by defining the “greater than” operator on proposal numbers; an acceptor only promises to accept a proposal if its proposal number is the highest one it has seen

[2, p1] > [1, p1] (the sequence number of first is greater, proposer name doesn’t matter)

[1, p2] > [1, p1] (if the sequence numbers are same, we break the tie using proposer name)

etc

Let’s run this refined algorithm where a proposer first gets its promise(proposal-number) message acknowledged by all acceptors and only then sends its accept message

An acceptor only promises a proposal if it has the highest proposal number it has yet seen

Good

We got a successful run

There is still a problem lurking here

Can you think of it?

Let’s take a pause

Things are starting to get interesting and the state can evolve in multiple ways here

Alternative #1

p1’s accept message can reach both acceptors before p2’s promise message reaches either

Alternative #2

p2’s promise message can reach both acceptors before p1’s accept message reaches either

Alternative #3

This is the most interesting alternative

p1’s accept message reaches one acceptor while p2’s promise message reaches the other

So far the proposers have been competing to get their values chosen.

What if they co-operated instead of competing?

After all, the purpose of the protocol is to get "some" value chosen and not necessarily "my" value chosen

What if p2 changed its mind and tried to get blue accepted instead of green if it learned that some acceptor has already accepted blue?

Will this work?

Will this work in all situations?

There are three logical alternatives

p1's accept message reaches all acceptors before p2's promise reaches any

(we are good in this case)

p2's promise message reaches all acceptors before p1's accept reaches any

(we are good in this case)

p1's accept reaches some acceptors while p2's promise message reaches others

(let's think this through)

If p1's acccept message reaches some acceptor, even if just one acceptor, we know that p2 is bound to learn about it as it sends the promise message to all acceptors

Thus p2 is bound to change its mind as long as even a single acceptor has already accepted a value

This worked!

We cheated.

Just a little.

But a1 did accept the higher numbered blue value sent by p2

What if p2 had sent green instead?

a1 had no choice but to accept that as well

If p2 hadn't changed its mind to try and get blue accepted instead of green, there is no guarantee that yet another proposer (say, p3) could later come along and get its even higher numbered orange value accepted instead

This cycle would never end

p2 changing its mind was thus critical for the correctness of the protocol

Acceptors can still accept a new value after having already accepted some value but the proposers make sure the new value they ask them to accept is the same as the old one (just with a different and higher numbered proposal)

Congratulations!

We have derived the Paxos protocol assuming no machine goes down

Machines do go down however

And what’s the point of a distributed consensus if we can’t tolerate machine failures?

The immediate next question is:

How many machine failures can we tolerate?

Intuitively, we should be able to function fine as long as the majority of the machines are up

What’s the smallest majority? 51%

As long as 51% of the machines are up, the protocol should be able to make forward progress

The machines which crashed can later come up and should be able to participate again without affecting the correctness of the protocol

If we want to tolerate f failures, we should have 2f+1 machine failures (always an odd number)

3 machines tolerate 1 failure (as smallest majority is 2)

5 machines tolerate 2 failures (as smallest majority is 3)

2f+2 machines can still only tolerate f number of failures so having an even number of machines wastes an extra machine

4 machines still tolerate 1 failure (as smallest majority is 3)

6 machines still tolerate 2 failures (as smallest majority is 4)

Any two majorities always have at least one node in common

Let’s consider three acceptors a1, a2, a3 - there are three possible smallest majorities

a1, a2

a2, a3

a1, a3

(Pick any two of the above - they always have a machine in common)

Let’s derive the protocol for when some machines can go down

Let’s consider two proposers as before (p1 and p2) but five acceptors (a1 - a5) and one learner as before

We’ll work towards tolerating the failure of at most two acceptors

Since both the proposers have to talk to the smallest majority, they must talk to at least one common node (p3 in our example)

There are two ways the situation can evolve

Alternative # 1

a3 receives p1’s promise message, acks it and then receives p2’s promise message leading it to discard a1’s subsequent accept message

Alternative # 2

a3 can receive p1’s promise message, ack it and receive p1’s accept message all before it receives p2’s promise message

We have seen this play out before - p2 will cooperate in this case and change its mind about which color to get chosen

This shouldn’t have been surprising

Turns out the rules we derived for the case when no machine goes down work pretty well in general assuming we wait to hear back from at least a majority of acceptors

Since a proposer doesn’t have to contact every acceptor, it’s possible that it can hear back from multiple acceptors each of which has accepted a different value

In this case, it changes its mind and tries to get the accepted value with the highest proposal-number accepted by the majority

We saw that a proposer can get acks with multiple accepted values if it only waits to hear back from majority

It changes its mind and gets the highest numbered value accepted from among the set of values it receives

This is it

This is the complete Paxos protocol for choosing a single value among a set of machines while tolerating f machine failures

Choose a globally unique proposal number and send promise(proposal-number) messages to all acceptors.

Wait to hear an acknowledgement from smallest majority.

If none of the received acknowledgements contain an already accepted value, feel free to send accept messages with the value of your choice.

If any of the received acknowledgements contain an already accepted value, pick the one with the higher proposal number and get that accepted instead of your own value.

When you receive a promise message, always acknowledge it if has the highest proposal number which you have seen; if the proposal number is lower than the one you had earlier acknowledged, feel free to not respond or send a negative acknowledgement

Acknowledge it even if you have already accepted another value but include your accepted value and its corresponding proposal number in your acknowledgement

When you receive an accept message, accept it if you had earlier acknowledged its proposal number which came through the promise message; also notify all the learners that you just accepted a value along with its proposal number

When you recieve a message from an acceptor informing you they accepted a value (along with its proposal number), remember it.

When you have received messages for the same proposal number from the smallest majority, you know the value the Paxos protocol chose.

There is typically only one proposer as it’s counter-productive to have more than one proposer proposing in a race

The distinguished proposer (aka leader) can be chosen from the set of machines using Paxos itself (think of the “value” being chosen as who will be the leader)

The leader also serves as the distinguished learner and conveys the values it learns to other nodes instead of all of them learning by receiving accepted messages

We have seen how Paxos can be used to choose a single value (but only a single value) in a consistent manner

In any real system, we will want to choose many different values

Before moving forward, let's take a step back and talk about replicated state machines

We'll then see how we can use Paxos (or more precisely, Multi-Paxos) to implement a replicated state machine

A replicated state machine is that same state machine but replicated across multiple computers for better fault tolerance and higher availability

This allows us to view a collection of machines as one logical machine which has a higher uptime than any of them combined

Each command is now executed on each replica of the state machine instead of at just one computer

As an important side note, command execution should be deterministic as a given command is executed multiple times, once for each replica

Each machine maintains a list of input commands and the system runs an instance of Paxos in order to choose which command to execute for position i

Since we run multiple instances of Paxos, one for each slot of the command list, this technique is called Multi-Paxos

To make things concrete, let's consider a system with three nodes (which we know can tolerate a single machine failure) which has accepted and run two commands

n2: [ c1, c2 ]

n3: [ c1, c2 ]

Each node has learned through two rounds of Paxos that the command for 1st position is c1 and 2nd position is c2

When a client wants to execute a new command (c3), it contacts the distinguished proposer (aka leader) which in turn starts a round of Paxos to get the value for the 3rd position in the list chosen

Each machine executes the command c3 as it accepts and learns that the system has chosen it as the value for the 3rd position in the list

n2: [ c1, c2, c3 ]

n3: [ c1, c2, c3 ]

Now assume the client issues c4, two nodes accept and learn it (while third node hasn't yet received all the messages)

n2: [ c1, c2, c3, c4 ]

n3: [ c1, c2, c3 ]

The system can move ahead as it can tolerate the failure (or slow down) of a single node

The client can then issue command c5 and this command can reach all the nodes (including n3)

n2: [ c1, c2, c3, c4, c5 ]

n3: [ c1, c2, c3, ??, c5 ]

n1 and n2 are free to execute c5 but n3 will have to wait to hear the value for the 4th slot before it's able to execute c5

Since n3 knows c5 has been chosen, it knows the value for the 4th slot must have been chosen by the majority

n2: [ c1, c2, c3, c4, c5 ]

n3: [ c1, c2, c3, ??, c5 ]

If the network dropped messages to n3, it can always start a dummy proposal round for the 4th slot and one of the nodes in the majority which accepted will reply back with the command it accepted for that slot

Once n3 learns the value for the 4th slot, it can execute both c4 and c5

n2: [ c1, c2, c3, c4, c5 ]

n3: [ c1, c2, c3, c4, c5 ]

Let's assume n3 gets unlucky and crashes while n1 and n2 continue to make progress

n2: [ c1, c2, c3, c4, c5, c6, c7, c8 ]

n3: [ c1, c2, c3, c4, c5 ]

When n3 comes back up, it suddenly learns the value for the 9th slot and realizes it missed all the updates in between

n2: [ c1, c2, c3, c4, c5, c6, c7, c8, c9 ]

n3: [ c1, c2, c3, c4, c5, ??, ??, ??, c9 ]

n3 can start dummy proposal messages for the slots which it missed and learn the value chosen for those slots from one (or more) nodes in the majority which accepted values for those slots

n2: [ c1, c2, c3, c4, c5, c6, c7, c8, c9 ]

n3: [ c1, c2, c3, c4, c5, c6, c7, c8, c9 ]